Why data-driven businesses need a data catalog

Relational databases, data lakes, and NoSQL data stores are powerful at inserting, updating, querying, searching, and processing data. But the irony of data management platforms is that they usually don’t provide robust tools or user interfaces for sharing what’s inside them. They are more like data vaults. You know there’s valuable data inside, but you have no easy way to assess it from the outside.

The business challenge is dealing with a multitude of data vaults: multiple enterprise databases, smaller data stores, data centers, clouds, applications, BI tools, APIs, spreadsheets, and open data sources.

Sure, you can query a relational database’s metadata for a list of tables, stored procedures, indexes, and other database objects to get a directory. But that is a time-consuming approach that requires technical expertise and only produces a basic listing from a single data source.
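To make the manual approach concrete, here is a minimal sketch that lists the objects in a single database by querying its metadata. It assumes SQLite, whose built-in `sqlite_master` table records every table, index, and view; the `customers` schema and the `list_database_objects` helper are hypothetical and exist only for illustration. Other database engines expose similar metadata through their own system catalogs.

```python
import sqlite3


def list_database_objects(conn):
    """Return (type, name, table) rows for every object in a SQLite database."""
    cursor = conn.execute(
        "SELECT type, name, tbl_name FROM sqlite_master ORDER BY type, name"
    )
    return cursor.fetchall()


# Build a small in-memory database so the example is self-contained.
# The schema below is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("CREATE INDEX idx_customers_email ON customers (email)")
conn.execute("CREATE VIEW customer_emails AS SELECT email FROM customers")

objects = list_database_objects(conn)
for obj_type, name, table in objects:
    print(f"{obj_type:6} {name} (on {table})")
```

Even in this tiny case the result is only a flat listing for one data source, with no ownership, usage, or sensitivity information attached.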

You can use tools that will reverse engineer data models or provide ways to navigate the metadata. But these tools are more often designed for technologists and mainly used for auditing, documenting, or analyzing databases.

In other words, querying database contents directly and extracting metadata with these tools are insufficient for today’s data-driven businesses for several reasons:

- The technologies require too much technical expertise and are unlikely to be used by less-technical end-users.

- The methods are too manual for enterprises that run multiple big data databases, use disparate database technologies, and operate hybrid clouds.

- The approaches are not particularly useful to data scientists or citizen data scientists who want to work collaboratively or run machine learning experiments with primary and derived data sets.

- The strategy of auditing database metadata doesn’t make it easy for data management teams to institute proactive data governance.

A single source of truth of an organization’s data assets

Data catalogs have been around for some time and have become more strategic today as organizations scale big data platforms, operate in hybrid clouds, invest in data science and machine learning programs, and sponsor data-driven organizational behaviors.

The first concept to understand about data catalogs is that they are tools for the entire organization to learn about and collaborate around data sources. They are important to organizations trying to be more data-driven, ones with data scientists experimenting with machine learning, and others embedding analytics in customer-facing applications.

Database engineers, software developers, and other technologists take on responsibilities to integrate data catalogs with the primary enterprise data sources. They also use and contribute to the data catalog, especially when databases are created or updated.

In that respect, data catalogs that interface with the majority of an enterprise’s data assets are a single source of truth. They help answer what data exists, how to find the best data sources, how to protect data, and who has expertise. The data catalog includes tools to discover data sources, capture metadata about those sources, search them, and provide some metadata management capabilities.

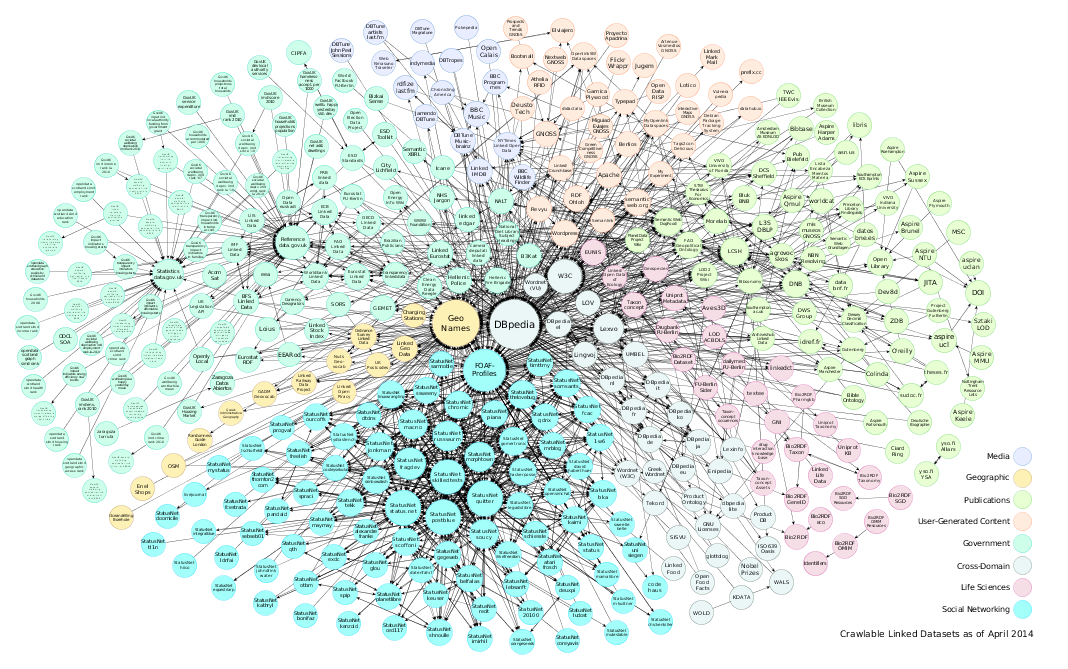

Many data catalogs go beyond the notion of a structured directory. Data catalogs often include relationships between data sources, entities, and objects. Most catalogs track different classes of metadata, especially on confidentiality, privacy, and security. They capture and share information on how different people, departments, and applications utilize data sources.
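As a rough illustration of what a catalog entry might hold, the sketch below models a toy in-memory catalog with ownership, tags, a sensitivity class, and relationships between data sources. Everything here is an assumption made for the example: the `CatalogEntry` and `DataCatalog` names, the fields, and the sample data do not come from any particular product, and a real catalog would persist this metadata and harvest it automatically.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Hypothetical metadata record for one data source."""
    name: str
    source: str                      # e.g. database, data lake, API
    owner: str                       # team with expertise on the data
    description: str = ""
    tags: set = field(default_factory=set)
    sensitivity: str = "internal"    # confidentiality class
    related: list = field(default_factory=list)  # names of related entries


class DataCatalog:
    """Toy catalog that supports registration and simple search."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, term):
        """Return names of entries whose name, description, or tags match."""
        term = term.lower()
        return sorted(
            e.name for e in self._entries.values()
            if term in e.name.lower()
            or term in e.description.lower()
            or any(term in t.lower() for t in e.tags)
        )

    def by_sensitivity(self, level):
        """Return names of entries in the given confidentiality class."""
        return sorted(
            e.name for e in self._entries.values() if e.sensitivity == level
        )


catalog = DataCatalog()
catalog.register(CatalogEntry(
    "crm.customers", "postgres", "sales-ops", "Customer master records",
    {"customer", "pii"}, "confidential", ["billing.invoices"],
))
catalog.register(CatalogEntry(
    "billing.invoices", "warehouse", "finance",
    "Invoices issued to customers", {"finance"},
))
catalog.register(CatalogEntry(
    "web.clickstream", "data lake", "analytics",
    "Raw page-view events", {"behavior"},
))

print(catalog.search("customer"))
print(catalog.by_sensitivity("confidential"))
```

The point of the sketch is that search and governance questions are answered from metadata alone, without touching the underlying data stores.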

Most data catalogs also include tools to define data dictionaries; some bundle in tools to profile data, cleanse data, and perform other data stewardship functions. Specialized data catalogs also enable or interface with master data management and data lineage capabilities.

Data catalog products and services

The market is full of data catalog tools and platforms. Some products grew out of other infrastructure and enterprise data management capabilities. Others represent a new generation of capabilities and focus on ease of use, collaboration, and machine learning differentiators. Naturally, the right choice will depend on scale, user experience, data science strategy, data architecture, and other organizational requirements.

Here is a sample of data catalog products:

- Azure Data Catalog and AWS Glue are data cataloging services built into public cloud platforms.

- Many data integration platforms have data cataloging capabilities, including Informatica Enterprise Data Catalog, Talend Data Catalog, SAP Data Hub, and IBM InfoSphere Information Governance Catalog.

- Some data catalogs are designed for big data platforms and hybrid clouds, such as Cloudera Data Platform and Infoworks DataFoundry, which supports data operations and orchestration.

- There are stand-alone platforms with machine learning capabilities, including Unifi Data Catalog, Alation Data Catalog, Collibra Catalog, Waterline Data, and IBM Watson Knowledge Catalog.

- Master data management tools, such as Stibo Systems and Reltio, and customer data platforms, such as Arm Treasure Data, can also function as data catalogs.

Machine learning capabilities drive insights and experimentation

Automated data discovery, repository search, and collaboration tools are the basics of a data catalog. More advanced data catalogs include capabilities in machine learning, natural language processing, and low-code implementations.

Machine learning capabilities take on several forms depending on the platform. For example, Unifi has a built-in recommendation engine that reviews how people are using, joining, and labeling primary and derived data sets. It captures utilization metrics and uses machine learning to make recommendations when other end-users query for similar data sets and patterns. Unifi also uses machine learning algorithms to profile data, identify sensitive personally identifiable information, and tag data sources.
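To give a feel for usage-based recommendations, here is a deliberately simple sketch that counts which data sets are used together and suggests the most frequent companions. It is a plain co-occurrence heuristic standing in for a trained model, not Unifi's engine; the session log, data set names, and the `build_co_usage` and `recommend` helpers are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_co_usage(sessions):
    """Count how often each pair of data sets appears in the same session."""
    co_usage = {}
    for datasets in sessions:
        for a, b in combinations(sorted(set(datasets)), 2):
            co_usage.setdefault(a, Counter())[b] += 1
            co_usage.setdefault(b, Counter())[a] += 1
    return co_usage


def recommend(co_usage, dataset, limit=3):
    """Suggest data sets most often used alongside the given one."""
    counts = co_usage.get(dataset, Counter())
    # Sort by descending count, then by name so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:limit]]


# Hypothetical usage log: each inner list is one analyst session.
sessions = [
    ["customers", "orders", "returns"],
    ["customers", "orders"],
    ["orders", "shipments"],
    ["customers", "support_tickets"],
]

co_usage = build_co_usage(sessions)
print(recommend(co_usage, "customers"))
```

A production recommendation engine would weigh far more signals, such as joins, labels, and query patterns, but the underlying idea of learning from captured utilization metrics is the same.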

Collibra is using machine learning to help data stewards classify data. Automatic Data Classification analyzes new data sets and matches them to 40 out-of-the-box classifications, such as addresses, financial information, and product identifiers.
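The general idea of matching a column to a known classification can be sketched with simple rules. The example below uses regular expressions and a match-ratio threshold rather than machine learning, so it is only a stand-in for what a product such as Collibra does; the three patterns, the `classify_column` helper, and the 0.8 default threshold are assumptions chosen for illustration.

```python
import re

# Hypothetical classifications, each defined by one regular expression.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "us_zip_code": re.compile(r"^\d{5}(-\d{4})?$"),
    "iso_date": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
}


def classify_column(values, threshold=0.8):
    """Return the best-matching classification for a column, or None.

    A label is assigned only when at least `threshold` of the non-empty
    sample values match its pattern.
    """
    samples = [v.strip() for v in values if v and v.strip()]
    if not samples:
        return None
    best_label, best_ratio = None, 0.0
    for label, pattern in PATTERNS.items():
        ratio = sum(1 for v in samples if pattern.match(v)) / len(samples)
        if ratio > best_ratio:
            best_label, best_ratio = label, ratio
    return best_label if best_ratio >= threshold else None


print(classify_column(["a@example.com", "b@example.org", "c@example.net"]))
print(classify_column(["94105", "10001-1234", "30301"]))
print(classify_column(["hello", "world"]))
```

The threshold tolerates a few dirty values in a column while still refusing to label columns that only occasionally match a pattern.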

Waterline Data has patented fingerprinting technology that automates the discovery, classification, and management of enterprise data. One of its focus areas is identifying and tagging sensitive data; the company claims to reduce the time needed for tagging by 80 percent.

Different platforms have different strategies and technical capabilities around data processing. Some only function at a data catalog and metadata level, whereas others have extended data prep, integration, cleansing, and other data operational capabilities.

Infoworks DataFoundry is an enterprise data operations and orchestration system that has direct integration with machine learning algorithms. It has a low-code, visual programming interface enabling end-users to connect data with machine learning algorithms such as k-means clustering and random forest classification.

We’re in the early stages of proactive platforms such as data catalogs that provide governance, operational capabilities, and discovery tools for enterprises with growing data assets. As organizations realize more business value from data and analytics, there will be a greater need to scale and manage data practices. Machine learning capabilities will likely be one area where different data catalog platforms compete.