What’s next for serverless architecture?

Serverless services are everywhere. The driving force behind an evolution towards a new way of programming, serverless offerings come in all forms and shapes including application hosting platforms, serverless databases, CDNs, security offerings, etc.

Low-level configuration, scaling, and provisioning concerns have been taken away by serverless offerings, leaving distribution as the last remaining concern. Here edge serverless provides a solution by distributing data and compute across many data centers. Edge serverless reduces latency by bringing calculations closer to the user.

Edge serverless is the culmination of an evolution of cloud architectures that began with Infrastructure-as-a-Service nearly 15 years ago. The next stage of that evolution will be to push the distribution of serverless “building blocks” further and make them easier for developers to consume.

Let’s take a closer look at where we’ve been and where we’re headed.

Layered architecture

Infrastructure as a Service (IaaS)

The cloud computing revolution started in earnest with the advent of Infrastructure-as-a-Service. With IaaS, companies could move their local infrastructure to hosted “cloud” infrastructure and operate from there. They paid only for the storage and compute hours that they used, and did not need to install or manage any hardware or network.

At first, IaaS seemed expensive, yet companies adopted it very quickly because uptime was guaranteed at a level they could not compete with, while the price of buying and maintaining their own infrastructure often surpassed the IaaS offering. The most important advantage was that the cloud removed hardware maintenance and provisioning, which liberated developers to focus on business value.

Platform as a Service (PaaS)

Vendors then took cloud computing one step further and offered the Platform-as-a-Service. Instead of renting just a server, a PaaS solution rents you everything you need to build an application. This includes not only the server, but also the operating system, programming language environment, database, and database management tools.

Whereas the IaaS provider makes you the administrator for the servers you rent, the PaaS provider takes over server administration tasks such as software installation or security updates and often attempts to anticipate the environmental requirements of your code. The goal of PaaS is to provide you with a simple way to deploy your application. Going one step further than IaaS, PaaS liberates developers from system admin tasks and allows them to focus on what matters most—the app.

AWS Elastic Beanstalk, Google App Engine, and Heroku are just a few of the providers that offer PaaS to the public.

Software as a Service (SaaS)

Software-as-a-Service typically refers to online-hosted applications that are available through a variety of subscriptions. The apps operate completely in the cloud and are accessed via the internet and a browser. In essence, every application that runs in the cloud and has a subscription-based pricing model is considered to be a SaaS application.

There are two types of SaaS applications: Those that focus on the end user, and those that focus on developers. The latter type aims to provide a basis for other applications. Gmail, Dropbox, Jira, BitBucket, and Slack are all examples of SaaS applications that focus on the end user, while Stripe and Slaask expose APIs that allow you to integrate their SaaS solution into your own application.

Container as a Service (CaaS)

Container platforms are the latest incarnation of IaaS. Instead of offering full-blown server hosts, CaaS providers let you host your services or applications within containers, and manage the containers on your behalf.

Containers are more efficient at utilizing underlying host resources than virtual machines. One can think of containers as “tiny machines.” They launch quickly, and multiple instances can run on a single server.

CaaS providers offer tools to deploy containers on servers and to scale the number of container instances up and down. The most advanced offerings completely manage the underlying servers for you, allowing your company to focus on the code (or containers) instead of the infrastructure.

CaaS has quickly become one of the building blocks for PaaS and SaaS, resulting in a layered architecture. There has been a shift toward developing applications as high on the pyramid as possible. Many complex applications are still a combination of SaaS, PaaS, and CaaS, since the available platforms are not flexible enough to deliver everything an application needs.

Figure (credit: Fauna): Many complex applications are a combination of SaaS, PaaS, and CaaS.

By relying as much as possible on SaaS, you free yourself from provisioning and scalability concerns. For the remaining parts, companies typically resort to running containers, which means they still have configuration and provisioning concerns.

To reduce these concerns, a fifth offering was created to fill in the gaps. Serverless architecture was born.

Serverless architecture

Function-as-a-Service (FaaS)

FaaS lets you upload and execute code without even thinking about scaling, servers, or containers. In that sense, it surpasses the ease-of-use principles of the previous offerings, but it also has limitations that are less prominent in PaaS.
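To make "upload and execute code" concrete, here is a minimal sketch of what a function might look like. It follows the `handler(event, context)` convention used by some providers' Python runtimes (AWS Lambda, for example); the event shape with a `name` field and the response layout are assumptions made for illustration, not any provider's required format.

```python
import json


def handler(event, context=None):
    """A single, stateless unit of work that the platform invokes per request.

    `event` is assumed to be a dict carrying the request payload; `context`
    stands in for provider-supplied runtime metadata and is unused here.
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Nothing in the function refers to servers, containers, or instance counts. The provider decides where it runs and how many copies are needed.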

The biggest advantage of FaaS is scaling. Scaling FaaS can be done at a finer granularity than PaaS or CaaS, and does not require configuration. Also, you do not pay for what you do not use.

- Granularity: PaaS applications typically only scale per application, while applications built on CaaS only scale per container. FaaS applications are broken down into separate functions and therefore scale per function. The downside is that it often requires you to rethink the architecture of your application. Instead of managing one app or a few containers, many functions that carry out smaller tasks have to be managed, which can introduce a lot of orchestration work.

- Configuration: Where you would normally configure when to scale (triggers to scale up and down) or manually set how many instances of an application or container need to run, FaaS does not require you to configure scaling or to provision specific resources.

- Pay-as-you-go: Instead of deploying containers (CaaS), which you pay for whether code is actively being executed or not, functions only incur charges when they are called. This pay-as-you-go pricing model is slowly becoming the most important aspect of the definition of serverless.

- Limits: In a typical application, you can define memory limits or execution time limits for your code. Although FaaS providers allow you to configure those, there are upper limits in place to ensure that the provider can effectively optimize these resources. One can imagine that it would be much harder for a provider to estimate how many servers have to be spun up to optimally use their resources if functions can be created with 10 GB of RAM or can run for a few hours.
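The pay-as-you-go point can be illustrated with some simple arithmetic. The sketch below compares a usage-based bill with an always-on container bill. The pricing model (a charge per million requests plus a charge per GB-second of execution) mirrors how several FaaS providers bill, but every price and name here is a hypothetical placeholder, not a real rate card.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaasPricing:
    # Hypothetical prices, chosen only to make the arithmetic easy to follow.
    price_per_million_requests: float
    price_per_gb_second: float


def faas_monthly_cost(pricing, invocations, avg_duration_s, memory_gb):
    """Usage-based cost: zero invocations means zero charge."""
    request_cost = invocations / 1_000_000 * pricing.price_per_million_requests
    compute_cost = (
        invocations * avg_duration_s * memory_gb * pricing.price_per_gb_second
    )
    return request_cost + compute_cost


def container_monthly_cost(hourly_rate, instances, hours_per_month=730):
    """Always-on cost: charged for every hour, whether code runs or not."""
    return hourly_rate * instances * hours_per_month


if __name__ == "__main__":
    pricing = FaasPricing(price_per_million_requests=0.20,
                          price_per_gb_second=0.00001)
    for calls in (0, 1_000_000, 100_000_000):
        cost = faas_monthly_cost(pricing, calls, avg_duration_s=0.1, memory_gb=0.5)
        print(f"{calls:>11} calls: {cost:.2f}")
    print(f"2 containers: {container_monthly_cost(0.05, 2):.2f}")
```

Under these assumed prices, the function bill starts at zero and grows with traffic, while the container bill stays flat regardless of load. Which model is cheaper depends entirely on the actual traffic and the provider's real prices.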

A new edge architecture

Serverless architecture took away provisioning and scaling concerns, but distribution remains a challenging problem. Ideally, we want our code to run as close to the end user as possible to reduce latency. There are multiple problems with the way we have been building applications until recently:

- Distributing logic: Unless you deploy your functions or containers in different regions, and route the client towards the closest function yourself, your function would typically remain in one data center.

- Distributing dynamic data: Distributing the logic without distributing the data is not going to reap great rewards because the latency has just moved to a different location. Your user might be closer to the back end, but your back end is still distant from the data layer.

- Cost, configuration, monitoring: It’s rare to see an application that’s distributed to more than two or three regions because doing so typically comes with additional cost or configuration, and requires you to monitor your functions or containers in multiple regions.
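As a rough illustration of what "routing the client towards the closest function yourself" involves, the sketch below picks the nearest deployment region by great-circle distance. The region names and coordinates are hypothetical, and geographic distance is only a proxy for network latency; real routing usually relies on DNS, anycast, or measured latency instead.

```python
import math

# Hypothetical regions with approximate (latitude, longitude) coordinates.
REGIONS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-southeast": (1.35, 103.8),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def closest_region(user_location, regions=REGIONS):
    """Return the name of the region geographically nearest to the user."""
    return min(regions, key=lambda name: haversine_km(user_location, regions[name]))
```

Even this toy version hints at the operational burden: every region in the table is something you have to deploy to, pay for, and monitor, which is the concern an edge platform aims to take over.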

The next evolution in serverless is to push the distribution further and deliver it without the need for configuration. This means that our logic and data are distributed across many regions around the globe, effectively minimizing the latency for our end users.

CDNs and Jamstack

We have already been using the most basic form of a service that provides automatic distribution; it’s called a content delivery network, or CDN. A few bright minds, at companies such as Netlify and Zeit, saw that automatic distribution could already be accomplished by pre-generating your app as much as possible, and handling the dynamic parts with serverless functions and SaaS APIs.

This approach, coined “Jamstack” by Netlify, has been gaining popularity rapidly because content delivery networks deliver the first taste of what an edge architecture could offer. Of course, there are limitations to a Jamstack that is based purely on server-side rendering. For instance, builds have to be triggered for new incoming content. This makes it very challenging to apply this approach to highly dynamic websites that have significant build times.

Figure (credit: Fauna): Jamstacks based on server-side rendering are challenged by highly dynamic websites, because builds must be triggered for new content.

Incremental builds and concepts such as client-side hydration offer partial solutions to this problem, but in the end we want our complex websites to have the advantages of both worlds: (very) low latencies for our end users and new content that is accessible immediately.
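The idea behind an incremental build can be sketched in a few lines: hash each piece of content and re-render only the pages whose hash changed since the previous build. This is a simplified illustration, not how any particular static site generator works; `render` and `incremental_build` are hypothetical names, and the in-memory `output` dict stands in for files pushed to a CDN.

```python
import hashlib
import html


def render(slug, body):
    """Pre-generate a static page for one piece of content."""
    return (f"<html><body><h1>{html.escape(slug)}</h1>"
            f"<p>{html.escape(body)}</p></body></html>")


def incremental_build(content, previous_hashes, output):
    """Re-render only pages whose content changed since the last build.

    content: slug -> body text
    previous_hashes: slug -> content hash from the previous build
    output: slug -> rendered HTML, updated in place
    Returns (new_hashes, rebuilt_slugs).
    """
    new_hashes = {}
    rebuilt = []
    for slug, body in content.items():
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        new_hashes[slug] = digest
        if previous_hashes.get(slug) != digest:
            output[slug] = render(slug, body)
            rebuilt.append(slug)
    # Drop pages whose content no longer exists.
    for slug in list(output):
        if slug not in content:
            del output[slug]
    return new_hashes, rebuilt
```

The build still has to be triggered whenever content changes, so new content is not visible until the affected pages have been regenerated and redeployed. Incremental builds shorten that delay but do not remove it.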

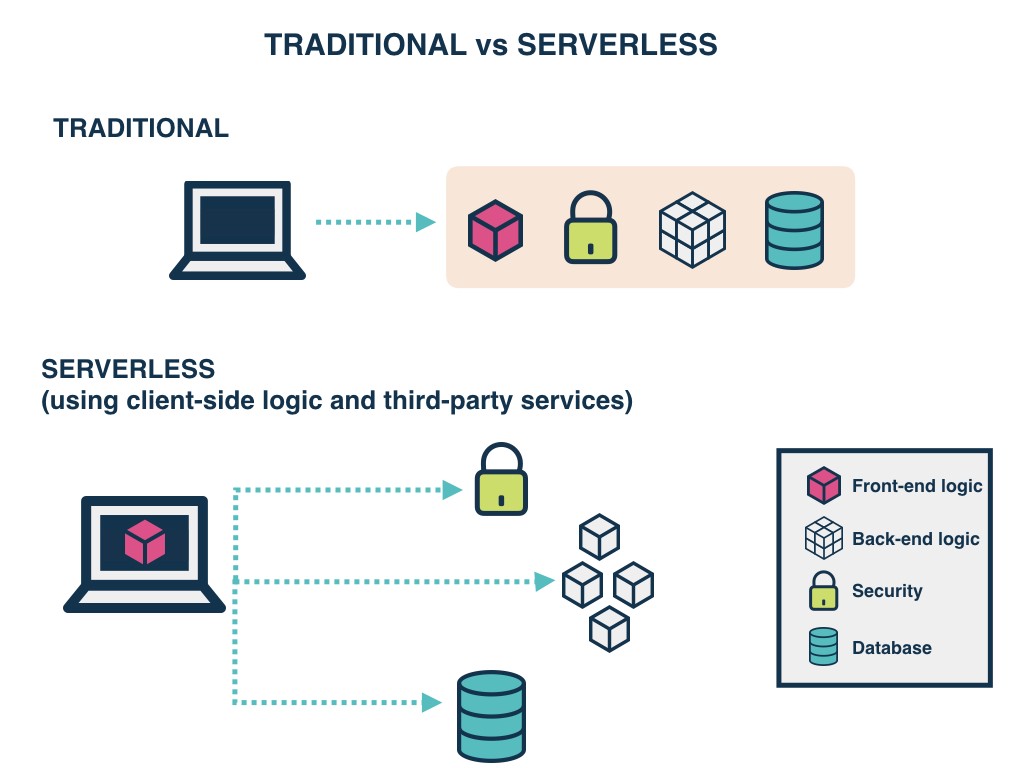

The rise of distributed services

We come from an architecture where the front end communicates with the back end, and the back end in turn communicates with the database and other services. The back end and database are often scaled together to keep latency between the back end and database low. Distribution is possible, but often cumbersome and therefore limited.

Figure (credit: Fauna): Distribution of the back end and database is possible, but often cumbersome and therefore limited.

In future architectures, the ideas of Jamstack will be taken to a new level by using other distributed services. Each of those services will be a distributed network whose nodes will not necessarily have to live in the same data center as other services. To reduce latency to an absolute minimum, the security model has to be rethought in order to let the front end communicate with the database and other service networks.

Figure (credit: Fauna): Future application architectures will take advantage of distributed service networks, distributed database networks, and distributed serverless back ends.

Let’s take a look at the services that are contributing to make this possible.

Distributed service networks

Many SaaS platforms, such as Algolia and SendGrid, aim to become the building blocks for other applications. They develop specific services that remove specific concerns from typical back-end applications. Some of these are evolving to become distributed services, such as Algolia, which calls itself a Distributed Search Network (DSN). Many other SaaS platforms will follow, and it’s likely that we will soon be talking about Distributed Service Networks as the next evolution of SaaS applications.

Distributed serverless databases

Databases such as Azure Cosmos DB, Google Cloud Spanner, and FaunaDB are adopting the pay-as-you-go serverless model and providing out-of-the-box distribution with some form of ACID guarantees. Some databases offer security layers and native GraphQL APIs that can be securely consumed by client applications and that play nicely with a serverless back end. Security layers make it possible for user interfaces to interact directly with the database instead of solely with a back end. Ideally, a front-end application can communicate with a globally distributed database with low latencies and ACID guarantees, almost as if the database were running locally.
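To give a feel for what such a security layer has to decide, here is a deliberately simplified sketch of a role-based check evaluated by the database for each request coming straight from a front end. It is not the API of any of the databases named above; the `Token` type, the `RULES` table, the role and collection names, and the `_own` suffix convention are all assumptions invented for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    """Hypothetical credential that the front end presents to the database."""
    user_id: str
    role: str


# Hypothetical rules: role -> collection -> allowed actions.
# An action ending in "_own" applies only to documents the caller owns.
RULES = {
    "customer": {
        "products": {"read"},
        "orders": {"read_own", "create"},
    },
    "admin": {
        "products": {"read", "create", "delete"},
        "orders": {"read", "create", "delete"},
    },
}


def is_allowed(token, collection, action, owner_id=None):
    """Decide whether the token may perform `action` on `collection`."""
    permissions = RULES.get(token.role, {}).get(collection, set())
    if action in permissions:
        return True
    # Fall back to ownership-scoped permissions such as "read_own".
    return f"{action}_own" in permissions and owner_id == token.user_id
```

Because the check runs inside the data layer, a browser holding a customer token can query its own orders directly, without a back end in between acting as gatekeeper.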

Distributed serverless edge computing

New serverless offerings, such as Cloudflare Workers and StackPath Serverless Scripting, are pushing serverless functions to the edge. They aim to bring functions as close to the end user as possible to reduce latency to an absolute minimum. Cloudflare Workers have 194 points of presence, while StackPath has 45-plus locations.

Why is this new edge architecture gaining traction now? When we consider the evolution of this transformation from IaaS to edge serverless, one stumbling block has always held us back: How to handle dynamic data? Although we’ve had services like Amazon S3 to host relatively static data, real databases have struggled to deliver a serverless experience. That’s because it’s incredibly hard to build a distributed system that is strongly consistent.

Figure (credit: Fauna): The serverless building blocks in the cloud work like Legos. Developers can combine the building blocks they need and stop worrying about scaling or distribution.

Today, we have serverless databases with built-in security that open the door for a new breed of applications—applications that scale in a globally distributed way by default. Since that door has opened, many developers have begun looking for ways to replace parts of their back end with microservices and APIs, opening up a new market for many SaaS providers.

The end result will be an ecosystem of building blocks that work like Legos. Soon developers will combine the building blocks they need and stop worrying about scaling or distribution.

Brecht De Rooms is senior developer advocate at Fauna. He is a programmer who has worked extensively in IT as a full-stack developer and researcher in both the startup and IT consultancy worlds. It is his mission to shed light on emerging and powerful technologies that make it easier for developers to build apps and services that will captivate users.